KVM

From Blue-IT.org Wiki


== Preface ==
At the time of writing, I am using KVM on a Lenovo ThinkServer TS 430. The machine stores the virtual machines on a RAID 5 array. The hypervisor is a Zentyal / Ubuntu 14.04 LTS distribution, which runs separately on a single hard disk. [[Zentyal]] makes administering networks, bridges and firewall tasks a lot easier than on a bare Ubuntu system, but it also adds some complexity.

For beginners who want to design a good network topology, the following articles are a good starting point:
* [http://www.tuxradar.com/content/howto-linux-and-windows-virtualization-kvm-and-qemu#null Tuxradar Howto]
* [http://sandilands.info/sgordon/linux-servers-as-kvm-virtual-machines A detailed example with a nice network topology diagram and iptables rules to get the big picture]
* [http://pic.dhe.ibm.com/infocenter/lnxinfo/v3r0m0/index.jsp?topic=%2Fliaat%2Fliaatkvmsecguest.htm IBM documentation on using KVM]
* [http://docs.openstack.org/image-guide/introduction.html OpenStack - Virtualisation Platform]

== Using VirtualBox and KVM together ==
Many tutorials say that using VirtualBox and KVM on the same server at the same time is NOT possible!
* http://swaeku.github.io/blog/2013/04/04/run-kvm-and-virtualbox-together/
One article says it is possible:
* [http://www.dedoimedo.com/computers/kvm-virtualbox.html Using VirtualBox and KVM]

See also:

* [https://help.ubuntu.com/community/KVM/FAQ Ubuntu community FAQ - KVM]

You don't have to uninstall either of them, but you have to choose which runtime is active:

Use '''VirtualBox'''
 sudo service qemu-kvm stop
 sudo service vboxdrv start
OR use '''KVM'''
 sudo service vboxdrv stop
 sudo service qemu-kvm start
Decide!
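The two command pairs above can be wrapped into a single switch. This is a minimal sketch, not part of the original setup: the function names `use_hypervisor` and `run_cmd` and the `DRY_RUN` variable are hypothetical, and it assumes the `qemu-kvm` and `vboxdrv` service names used above (Ubuntu 14.04 era).

```shell
#!/bin/bash
# Hypothetical helper (not from the original page): switch between the
# VirtualBox and KVM runtimes with the service commands shown above.
# DRY_RUN=1 only prints the commands instead of running them via sudo.
run_cmd() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        sudo "$@"
    fi
}

use_hypervisor() {
    case "$1" in
        vbox)
            # stop KVM first, then start the VirtualBox kernel driver
            run_cmd service qemu-kvm stop
            run_cmd service vboxdrv start
            ;;
        kvm)
            # stop the VirtualBox kernel driver first, then start KVM
            run_cmd service vboxdrv stop
            run_cmd service qemu-kvm start
            ;;
        *)
            echo "usage: use_hypervisor vbox|kvm" >&2
            return 2
            ;;
    esac
}
```

For example, `DRY_RUN=1 use_hypervisor kvm` only prints the two commands, which is a cheap way to check what would happen before running `use_hypervisor kvm` for real.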

== Command line foo ==
Prerequisites:
 sudo apt-get install ubuntu-vm-builder

Show running machines:
 virsh -c qemu:///system list

Save and restart a machine (hibernate):
 #!/bin/bash
 VM="$1"
 [ -n "$VM" ] || { echo "usage: $0 <domain>" >&2; exit 1; }
 # write the RAM state to a file and stop the domain ...
 virsh save "$VM" "/data/savedstate/$VM"
 # ... then start it again from that file
 virsh restore "/data/savedstate/$VM"
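The same `virsh save` call can be applied to every running machine, e.g. before host maintenance. This is a sketch under assumptions: `save_all_running`, `SAVE_DIR` and `VIRSH` are hypothetical names, and it relies on `virsh list --name`, which prints one running domain name per line.

```shell
#!/bin/bash
# Hypothetical helper (not from the original page): save every running domain
# to SAVE_DIR (default /data/savedstate, as in the script above).
# VIRSH may point to another command, e.g. a test stub.
save_all_running() {
    local virsh="${VIRSH:-virsh}" dir="${SAVE_DIR:-/data/savedstate}" vm rc=0
    # "virsh list --name" prints one running domain per line (plus an empty line)
    while read -r vm; do
        [ -n "$vm" ] || continue
        # </dev/null keeps virsh from consuming the loop's input
        "$virsh" save "$vm" "$dir/$vm" </dev/null || rc=1
    done < <("$virsh" list --name)
    # non-zero if at least one save failed
    return "$rc"
}
```

Each saved machine can later be started again with `virsh restore /data/savedstate/<name>`, exactly as in the script above.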

Show bridges:
 brctl show

Show iptables NAT rules (refreshed every 2 seconds):
 watch -n2 iptables -nvL -t nat

Renaming the image of a virtual machine is not a trivial task. It is NOT enough to edit the XML files in /etc/libvirt/qemu. Shut the machine down first, then:

 # [1] RENAME the image
 mv /vms/my.img /vms/new_name.img

 dom="my-domain"
 virsh dumpxml "$dom" > "${dom}.xml"

 # [2] EDIT the XML, so the disk path matches your move from [1]
 vim "${dom}.xml"

 virsh undefine "$dom"
 virsh define "${dom}.xml"

 rm "${dom}.xml"
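The interactive "[2] EDIT" step can also be done non-interactively. A minimal sketch: `rewrite_disk_path` is a hypothetical helper, and it assumes the image is referenced as `file='...'` in the XML, which is the single-quoted form `virsh dumpxml` writes for file-backed disks.

```shell
#!/bin/bash
# Hypothetical helper (not from the original page): replace the disk path in a
# domain XML dump, as a non-interactive alternative to editing it in vim.
# Assumes the image is referenced as file='OLD_PATH'.
rewrite_disk_path() {
    local xml="$1" old="$2" new="$3" content
    # Keep patterns in variables so "/" in paths cannot confuse ${var//a/b}
    local from="file='$old'" to="file='$new'"
    content=$(<"$xml")
    # Quoted expansions are matched literally, so "." in file names is safe
    if [[ "$content" != *"$from"* ]]; then
        echo "rewrite_disk_path: $from not found in $xml" >&2
        return 1
    fi
    content=${content//"$from"/"$to"}
    printf '%s\n' "$content" > "$xml"
}
```

With the names from above: `rewrite_disk_path "${dom}.xml" /vms/my.img /vms/new_name.img`. It refuses to touch the file if the old path is not found, so a typo does not silently produce an unchanged definition.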

== Convert an image ==

A good starting point is the documentation of [http://docs.openstack.org OpenStack], THE stack for managing systems of virtual appliances, which has very good overall documentation on virtualisation:

* http://docs.openstack.org/image-guide/convert-images.html
* http://docs.openstack.org/image-guide/introduction.html

If you want to convert e.g. a (compressed) vmdk image to qcow2, you have to take a two-step route via a raw image; a vdi image can be converted directly (see below). You can use either ''qemu-img'' or ''VBoxManage'' for conversion tasks.

For the conversion, I highly recommend using ...

# '''two different drives''' for the input and output image (HDD, SSD)
# and a '''high-speed connection''' between them (USB 3.0, SATA)

... because the files can get very big and the '''conversion speed depends almost entirely on the speed of the hardware used.'''

=== From vdi to qcow2 ===
If you have a vdi image, you can convert it directly to qcow2:
 qemu-img convert -p -f vdi -O qcow2 'image.vdi' 'image.qcow2'

=== From vmdk via raw to qcow2 ===
==== 1. From vmdk to raw ====
This is always the first step:

 qemu-img convert -p -f vmdk -O raw original_image.vmdk raw_image.raw
 (2.00/100%)

Tip: The ''-p'' option shows the progress, e.g. "(2.00/100%)", during the conversion.

==== 2. From raw image to qcow2 ====

qcow2 is the preferred format:

 qemu-img convert -p -f raw -O qcow2 raw_image.raw original_image.qcow2
 (2.00/100%)
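The two steps above can be chained so that the second one only runs if the first succeeded. This is a sketch, not a tested production script: `vmdk_to_qcow2` and the `QEMU_IMG` override are hypothetical, while the `qemu-img convert` options are the ones used above.

```shell
#!/bin/bash
# Hypothetical helper (not from the original page): vmdk -> raw -> qcow2.
#   $1 = source .vmdk, $2 = target .qcow2, $3 = directory for the raw file
#   (defaults to the target's directory; it needs room for the full disk size)
# QEMU_IMG may point to another command, e.g. a test stub.
vmdk_to_qcow2() {
    local src="$1" dst="$2" raw_dir="${3:-$(dirname "$2")}"
    local qemu_img="${QEMU_IMG:-qemu-img}"
    local raw="$raw_dir/$(basename "${src%.vmdk}").raw"

    # Step 1: vmdk -> raw
    "$qemu_img" convert -p -f vmdk -O raw "$src" "$raw" || return 1
    # Step 2: raw -> qcow2
    "$qemu_img" convert -p -f raw -O qcow2 "$raw" "$dst" || return 1
    # Only delete the big intermediate image once both steps have succeeded
    rm -f "$raw"
}
```

Following the hardware advice above, the third argument lets you put the raw file on a second, fast drive, e.g. `vmdk_to_qcow2 /mnt/hdd/win.vmdk /vms/win.qcow2 /mnt/ssd`.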

== Copy an image ==
Copying an image is an easy task. The only things to remember are to change the UUID, the MAC address and the name of the virtual machine. If you are going to copy the machine to another libvirt instance, make sure that the storage pools and e.g. the virtual networks have the same names there!

# Change the UUID
# Change the MAC address
# Change the name of the machine (if it stays in the same environment)

 # Stop the machine (wait until "virsh list" no longer shows it)
 virsh shutdown my.vm

 # copy over the storage image
 cp {my.vm,my-copy.vm}.img

 # do an xml dump of the vm definition
 virsh dumpxml my.vm > /tmp/my-new.xml

 # delete uuid and MAC address; libvirt assigns new ones on "define"
 sed -i '/<uuid>/d' /tmp/my-new.xml
 sed -i '/mac address/d' /tmp/my-new.xml

 # rename the vm ("g" also renames the image path if it contains the old name)
 sed -i 's/name_of_old_vm/new_name/g' /tmp/my-new.xml

 # copy over all files to a new system
 # names of storage pools and e.g. virtual networks must match!
 # and create the new vm
 virsh define /tmp/my-new.xml
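The three XML edits above can be combined into one filter. A minimal sketch under assumptions: `clone_domain_xml` is a hypothetical helper, the names may only contain letters, digits, `.`, `_` and `-`, and the image file is assumed to be named after the VM (as in `my.vm.img`). For the same job, the `virt-clone` tool from virtinst is an alternative; this sketch only mirrors the manual `sed` steps.

```shell
#!/bin/bash
# Hypothetical helper (not from the original page): turn the XML dump of a
# domain into the definition of a copy. Reads XML on stdin, writes to stdout.
#   $1 = old name, $2 = new name
clone_domain_xml() {
    local old="$1" new="$2" old_re
    # Only simple names are supported, so no other sed escaping is needed
    case "$old$new" in
        *[!A-Za-z0-9._-]*) echo "clone_domain_xml: unsupported name" >&2; return 1 ;;
    esac
    # Escape the dots so "my.vm" does not match e.g. "myXvm"
    old_re=$(printf '%s' "$old" | sed 's/\./\\./g')
    # Drop the <uuid> and <mac address=...> lines (libvirt generates new ones)
    # and rename every occurrence of the old name (domain name and image path)
    sed -e '/<uuid>/d' \
        -e '/<mac address=/d' \
        -e "s/$old_re/$new/g"
}
```

Usage: `virsh dumpxml my.vm | clone_domain_xml my.vm my-copy.vm > /tmp/my-new.xml`, followed by `virsh define /tmp/my-new.xml`.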

== Mount qcow images with nbd ==
Sometimes a qcow(2) image does not start. There is an easy way to mount and recover such images on Linux with the '''nbd kernel module (network block device)'''.

Let's assume you want to check and repair a Windows 7 image, which normally has two NTFS partitions.

 sudo su
 modprobe nbd max_part=8
 qemu-nbd --connect=/dev/nbd0 windows7.qcow2

Now you can handle the nbd device like a normal hard disk.

For example, ''cfdisk'' shows the following output:

 cfdisk /dev/nbd0

  Name        Flags      Part Typ   FS Type     [Label]              Size (MB)

                         Pri/Log    Free Space                            1,05
  nbd0p1      Boot       Primary    ntfs        [System-reservier]      104,86
  nbd0p2                 Primary    ntfs                              42842,72
                         Pri/Log    Free Space

You can mount the partitions ...

 mount /dev/nbd0p1 MOUNTPOINT_P1
 mount /dev/nbd0p2 MOUNTPOINT_P2

... or check them (unmounted) with ''ntfsck'' or other tools.

 ntfsck /dev/nbd0p1

When you are done, unmount the partitions and disconnect the device:

 umount MOUNTPOINT_P1 MOUNTPOINT_P2
 qemu-nbd --disconnect /dev/nbd0
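Forgetting the final disconnect leaves the image attached to `/dev/nbd0`. A minimal sketch of a wrapper that always disconnects, even when the command fails: `with_nbd` and the `QEMU_NBD` override are hypothetical, and the nbd module still has to be loaded first (`modprobe nbd max_part=8`, as above).

```shell
#!/bin/bash
# Hypothetical wrapper (not from the original page): connect an image to an nbd
# device, run one command against it and always disconnect afterwards, even if
# the command fails. Run as root after "modprobe nbd max_part=8".
# QEMU_NBD may point to another command, e.g. a test stub.
with_nbd() {
    local image="$1" dev="$2" qemu_nbd="${QEMU_NBD:-qemu-nbd}" rc
    shift 2
    "$qemu_nbd" --connect="$dev" "$image" || return 1
    # Run the given command (e.g. cfdisk or ntfsck) while the device is connected
    "$@"
    rc=$?
    "$qemu_nbd" --disconnect "$dev"
    # Report the exit status of the command, not of the disconnect
    return "$rc"
}
```

Example: `with_nbd windows7.qcow2 /dev/nbd0 ntfsck /dev/nbd0p1`. The partition devices may take a moment to appear after connecting, so a short `sleep` before the command may be needed on some systems.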

== VirtIO ==
VirtIO drivers speed up network and disk I/O a lot for Windows guests. They are not shipped with Windows. The easiest way is to download them from Proxmox (version < 173):
* https://pve.proxmox.com/wiki/Windows_VirtIO_Drivers
Also read:
* https://docs.fedoraproject.org/en-US/quick-docs/creating-windows-virtual-machines-using-virtio-drivers/index.html

Installation:
If you downloaded an ISO version greater than 173, you can run the installer inside Windows. Be aware that you cannot start a Windows system that is configured to use VirtIO devices unless the VirtIO drivers are installed!

== Spice ==

This was tested with Ubuntu 14.04 (emulator /usr/bin/kvm-spice).
--[[User:Apos|Apos]] ([[User talk:Apos|talk]]) 20:58, 17 April 2014 (CEST)

Enable '''spice''' as the display type and '''qxl''' as the model of the video adapter.

Then make sure the following packages are installed on the client side:

 apt-get install python-spice-client-gtk spice-client-gtk virt-viewer virt-manager
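To check whether a machine really uses both settings, you can inspect its definition. This is a sketch: `has_spice_qxl` is a hypothetical helper, and it assumes the single-quoted attribute style that `virsh dumpxml` produces (`<graphics type='spice' ...>`, `<model type='qxl' ...>`).

```shell
#!/bin/bash
# Hypothetical check (not from the original page): does a domain definition use
# a SPICE display and a QXL video adapter? Reads domain XML on stdin.
has_spice_qxl() {
    local xml
    xml=$(cat)
    [[ "$xml" == *"<graphics type='spice'"* ]] || { echo "no spice display" >&2; return 1; }
    [[ "$xml" == *"<model type='qxl'"* ]] || { echo "no qxl video adapter" >&2; return 1; }
}
```

Usage: `virsh dumpxml my-vm | has_spice_qxl && echo "spice + qxl enabled"`.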

== Migration from VirtualBox to KVM ==
This boils down to
# having a lot of time
# having a lot of free hard disk space
# creating a clone of the VirtualBox machine with ''VBoxManage clonehd'' (this can take a looooong time!). Cloning is the easiest way of getting rid of the snapshots of an existing virtual machine.
# converting the images from ''vdi'' to ''qcow'' format with ''qemu-img convert''


* http://serverfault.com/questions/249944/how-to-export-a-specific-virtualbox-snapshot-as-a-raw-disk-image/249947#249947

To clone an image on the same machine, you have to STOP KVM and start vboxdrv (see above). Also be aware that raw images take up a lot of space!

 # The conversion can take some time. Other virtual machines are not accessible during this time
 VBoxManage clonehd -format RAW myOldVM.vdi /home/vm-exports/myNewVM.raw


 cd /to/the/SnapShot/dir
 VBoxManage clonehd -format RAW "SNAPSHOT_UUID" /home/vm-exports/myNewVM.raw
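The clone and the conversion to qcow2 can be chained into one call. This is a sketch under assumptions: `vbox_to_qcow2`, `VBOXMANAGE`, `QEMU_IMG` and `KEEP_RAW` are hypothetical names; the `VBoxManage clonehd -format RAW` and `qemu-img convert` invocations are the ones used on this page.

```shell
#!/bin/bash
# Hypothetical helper (not from the original page): clone a VirtualBox disk (or
# snapshot UUID) to a raw image, then convert the raw image to qcow2.
#   $1 = vdi file or snapshot UUID, $2 = raw file, $3 = qcow2 file
# VBOXMANAGE / QEMU_IMG may point to other commands, e.g. test stubs.
# KEEP_RAW=1 keeps the (large) raw image afterwards.
vbox_to_qcow2() {
    local src="$1" raw="$2" qcow="$3"
    local vboxmanage="${VBOXMANAGE:-VBoxManage}" qemu_img="${QEMU_IMG:-qemu-img}"

    # Step 1: VirtualBox -> raw (this also flattens snapshots, see above)
    "$vboxmanage" clonehd -format RAW "$src" "$raw" || return 1
    # Step 2: raw -> qcow2
    "$qemu_img" convert -p -f raw -O qcow2 "$raw" "$qcow" || return 1
    # Raw images are as big as the virtual disk, so remove it unless asked not to
    [ "${KEEP_RAW:-0}" = "1" ] || rm -f "$raw"
}
```

Remember that VirtualBox must be the active runtime (vboxdrv started) while step 1 runs.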

When you start the converted (Windows) machine with plain qemu-kvm, you will most likely get a BSOD. This can be circumvented by starting the machine as described in the following article:

* https://bbs.archlinux.org/viewtopic.php?id=194350

... with something like

 qemu-system-x86_64 \
   ... \
   -machine q35,accel=kvm \
   -drive if=none,id=d1,file=your.image \
   -device ahci,id=ahci \
   -device ide-drive,bus=ahci.0,drive=d1

(On newer QEMU versions, the ''ide-drive'' device has been replaced by ''ide-hd''.)

I found out that using these settings in ''virt-manager'' also works very well. I also set

* audio to ''ac97'' and
* network to the ''e1000'' driver

Then I start the machine in safe mode with networking and install the [https://www.google.de/search?client=ubuntu&channel=fs&q=windows+virtio+drivers+networking+iso&ie=utf-8 virtio drivers for networking from linux-kvm.org] and the [http://www.spice-space.org/download.html spice video guest drivers and qxl drivers from spice-space.org].

== Accessing a - via Zentyal configured - bridged machine ==
On Ubuntu 12.04 there is [https://bugs.launchpad.net/ubuntu/+source/procps/+bug/50093 Ubuntu bug #50093] (mentioned [http://wiki.libvirt.org/page/Networking#Debian.2FUbuntu_Bridging here]), which prevents access to a machine inside the bridged network. The workaround is to disable netfilter on the bridge:

 > vim /etc/sysctl.conf
 net.bridge.bridge-nf-call-ip6tables = 0
 net.bridge.bridge-nf-call-iptables = 0
 net.bridge.bridge-nf-call-arptables = 0

Activate:
 sysctl -p /etc/sysctl.conf

Make permanent:
 > vim /etc/rc.local
 # Sample rc.local file
 /sbin/sysctl -p /etc/sysctl.conf
 iptables -A FORWARD -p tcp --tcp-flags SYN,RST SYN -j TCPMSS --clamp-mss-to-pmtu
 exit 0

Verify:
 tail /proc/sys/net/bridge/*
 iptables -L FORWARD

 > brctl show
 bridge name     bridge id               STP enabled     interfaces
 br1             8000.50e5492d616d       no              eth1
                                                         vnet1
 [...]
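The `tail /proc/sys/net/bridge/*` check can be turned into a pass/fail test. A minimal sketch: `check_bridge_nf` and `BRIDGE_PROC` are hypothetical names. It treats a missing file as fine, on the assumption that the files only exist while the bridge netfilter code is loaded.

```shell
#!/bin/bash
# Hypothetical check (not from the original page) for the three sysctl settings
# above: prints each value and fails if any of them is not 0.
# BRIDGE_PROC defaults to the real /proc path (overridable for testing).
check_bridge_nf() {
    local dir="${BRIDGE_PROC:-/proc/sys/net/bridge}" name value rc=0
    for name in bridge-nf-call-ip6tables bridge-nf-call-iptables bridge-nf-call-arptables; do
        if [ ! -r "$dir/$name" ]; then
            # no file: bridge netfilter is not loaded, so nothing is filtered
            echo "$name: not available"
            continue
        fi
        value=$(<"$dir/$name")
        echo "$name = $value"
        [ "$value" = "0" ] || rc=1
    done
    return "$rc"
}
```

Running `check_bridge_nf` after a reboot shows whether the rc.local line actually took effect.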

== Accessing services on KVM guests behind a NAT ==
=== Preface ===
Be careful: by doing this, you open up ports to the outside world. If you run pfSense or another firewall in front of your host, you can simply restrict access to clients connected via VPN.

Access from the internet to the guest:
 internet -> pfSense (WAN / host ip and port)
   -> host port -> iptables -> nat bridge -> guest port

Access to the guest only via VPN:
 internet -> pfSense (OpenVPN / host ip and port)
   -> host port -> iptables -> nat bridge -> guest port

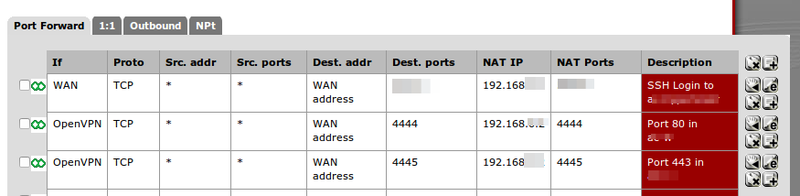

[[File:Pfsense port forward kvm nat bridge.png|800px]]

=== The qemu hook ===
This is done with a hook script for qemu:
 /etc/libvirt/hooks/qemu

This script can be literally anything executable: a bash script, a Python script, whatever.

It looks like this:
 vim /etc/libvirt/hooks/qemu

 #!/bin/bash
 # libvirt calls this script with the domain name as $1 and the operation (e.g. start, stopped) as $2
 run_another_script param1 param2

See the [[KVM#Qemu_hook_script|Qemu hook script]] section for an example that uses this to configure your own iptables rules for your machines.

I am referring to this article:
* http://systemdilettante.blogspot.de/2013/06/accessing-services-on-kvm-guests-behind.html
which is mentioned in the libvirt wiki:
* http://wiki.libvirt.org/page/Networking#Guest_configuration

=== Control NAT rules ===
iptables is what you want, but there are some pitfalls:

# the PREROUTING rules that enable port forwarding into the NAT'ed machine must be applied before (!) the virtual machine starts
# if you have a service like Zentyal installed, or you restart your firewall, all rules are reset
# libvirt NAT rules for the bridges are applied when the service starts; this can interfere with other rules
# This is done by a qemu hook script, called '''/etc/libvirt

On [[Zentyal]], there are other pitfalls. Please [[Zentyal read this]].

=== An example ===
The PREROUTING rules for '''vm-1''' in the listing below forward the host ports 1222, 1223 and 1444 to the ports 80, 443 and 8080 of the NAT'ed virtual machine with the IP 192.168.122.2, so these services (web server, ...) are accessible from the outside world. This also means that the forwarded host ports can no longer be used by the host system itself (e.g. move the admin interface of Zentyal to a different port).

The POSTROUTING rules are set automatically by virt-manager (libvirt) and allow the virtual machine to access the internet from inside via NAT.

 iptables -nvL -t nat [--line-numbers]

Then you should see something like the following:

 root@myHost:# iptables -nvL -t nat
 Chain PREROUTING (policy ACCEPT 216 packets, 14658 bytes)
  pkts bytes target     prot opt in     out     source               destination
     6   312 DNAT       tcp  --  *      *       0.0.0.0/0            0.0.0.0/0            tcp dpt:1222 to:192.168.122.2:80
     2   120 DNAT       tcp  --  *      *       0.0.0.0/0            0.0.0.0/0            tcp dpt:1223 to:192.168.122.2:443
     0     0 DNAT       tcp  --  *      *       0.0.0.0/0            0.0.0.0/0            tcp dpt:1444 to:192.168.122.2:8080

 Chain INPUT (policy ACCEPT 14 packets, 2628 bytes)
  pkts bytes target     prot opt in     out     source               destination

 Chain OUTPUT (policy ACCEPT 12 packets, 818 bytes)
  pkts bytes target     prot opt in     out     source               destination

 Chain POSTROUTING (policy ACCEPT 17 packets, 1048 bytes)
  pkts bytes target     prot opt in     out     source               destination
     0     0 MASQUERADE tcp  --  *      *       192.168.122.0/24    !192.168.122.0/24     masq ports: 1024-65535
     6   406 MASQUERADE udp  --  *      *       192.168.122.0/24    !192.168.122.0/24     masq ports: 1024-65535
     0     0 MASQUERADE all  --  *      *       192.168.122.0/24    !192.168.122.0/24
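With many guests, the raw listing gets long. A sketch of a filter that reduces it to the port forwardings only: `list_dnat` is a hypothetical helper, and it assumes the `dpt:PORT to:IP:PORT` columns shown in the listing above.

```shell
#!/bin/bash
# Hypothetical helper (not from the original page): summarise the DNAT port
# forwardings from "iptables -nvL -t nat" output read on stdin, one
# "host_port -> guest_ip:guest_port" line per rule.
list_dnat() {
    awk '
        {
            is_dnat = 0; dpt = ""; to = ""
            for (i = 1; i <= NF; i++) {
                if ($i == "DNAT") is_dnat = 1
                if ($i ~ /^dpt:/) dpt = substr($i, 5)   # text after "dpt:"
                if ($i ~ /^to:/)  to  = substr($i, 4)   # text after "to:"
            }
            if (is_dnat && dpt != "" && to != "") print dpt " -> " to
        }'
}
```

Usage: `iptables -nvL -t nat | list_dnat`. For the listing above, this prints `1222 -> 192.168.122.2:80`, `1223 -> 192.168.122.2:443` and `1444 -> 192.168.122.2:8080`.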

=== Qemu hook script ===

A script from [http://www.jimscode.ca/index.php/component/content/article/19-linux/142-linux-port-forwarding-to-guest-libvirt-vms here] is slightly altered:
* Guest port same as host port
* ability to apply more than one port
* ability to serve more than one guest
* you cannot distinguish between inside and outside port - not yet ;)
* ... will be updated!

'''Prerequisites''': On a (KVM) host with the IP 192.168.0.10, a NAT'ed virtual network bridge with the network 192.168.122.0/24 has been created, e.g. with '''virt-manager'''.

If your virtual server has the IP 192.168.122.2/24 (in our example here the '''vm-webserver'''), this machine must be attached to the NAT'ed bridge 192.168.122.0/24 with virt-manager.

Inside the machine, you have to set the bridge's address 192.168.122.1 as the gateway. Then, and only then, can you reach this machine (and its ports) through the following script using your '''host's (!!!) IP''' 192.168.0.10.

The port 443 of your web server on 192.168.122.2 can then be reached from outside via 192.168.0.10:443.

If there is a firewall in front of the KVM host, forward the ports to exactly this address (192.168.0.10:443) to reach the https website inside the vm-webserver with the IP 192.168.122.2.

Everything is handled by the gateway of the bridge, 192.168.122.1, and the NAT rules you apply at start time.

Capiche?

 > script="/etc/libvirt/hooks/qemu"; \
   touch "$script"; \
   chmod +x "$script"; \
   vim "$script"

The script runs another script, which first removes all custom iptables rules twice and then re-adds them to the system.

 #!/bin/bash
 /usr/local/bin/set_iptables_all_guests remove
 sleep 1
 /usr/local/bin/set_iptables_all_guests remove
 sleep 1

 /usr/local/bin/set_iptables_all_guests add

=== Set iptables script for guests (used by qemu hook) ===

Use the following script to manage the iptables rules of all your machines in one central place.

This is handy, because you can test everything manually like this:
 set_iptables_all_guests add
 set_iptables_all_guests remove

Let's go:
 vim /usr/local/bin/set_iptables_all_guests

 #!/bin/bash
 # usage: set_iptables_all_guests <guest_name> start|stopped|reconnect   (as called by a libvirt hook)
 #        set_iptables_all_guests add|remove                             (all guests configured below)

 del_prerouting() {
   iptables -t nat -D PREROUTING -p tcp --dport "${1}" -j DNAT --to "${2}:${3}"
 }

 del_forward() {
   iptables -D FORWARD -d "${1}/32" -p tcp -m state --state NEW -m tcp --dport "${2}" -j ACCEPT
 }

 del_output() {
   #- allows port forwarding from localhost but
   # only if you use the ip (e.g http://192.168.1.20:8888/)
   iptables -t nat -D OUTPUT -p tcp -o lo --dport "${1}" -j DNAT --to "${2}:${3}"
 }

 add_prerouting() {
   iptables -t nat -A PREROUTING -p tcp --dport "${1}" -j DNAT --to "${2}:${3}"
 }

 add_forward() {
   iptables -I FORWARD -d "${1}/32" -p tcp -m state --state NEW -m tcp --dport "${2}" -j ACCEPT
 }

 add_output() {
   #- allows port forwarding from localhost but
   # only if you use the ip (e.g http://192.168.1.20:8888/)
   iptables -t nat -I OUTPUT -p tcp -o lo --dport "${1}" -j DNAT --to "${2}:${3}"
 }

 # "add"/"remove" apply or delete the rules of every guest configured below
 # (keep this list in sync with the Guest_name blocks)
 if [ "${1}" = "add" ] || [ "${1}" = "remove" ]; then
   [ "${1}" = "add" ] && op=start || op=stopped
   for guest in vm-email vm-webserver; do
     bash "$0" "$guest" "$op"
   done
   exit 0
 fi


 ###############################################
 # ONLY EDIT HERE
 Guest_name=vm-email
 # the admin interface via vpn and ports 4444 and 4445
 ###############################################

 if [ "${1}" = "${Guest_name}" ]; then

   ###############################################
   # ONLY EDIT HERE
   Guest_ipaddr=192.168.11.2
   Host_port=( '4444' '4445' '993' '587' '25' '465' '143' )
   Guest_port=( '80' '443' '993' '587' '25' '465' '143' )
   ###############################################

   length=$(( ${#Host_port[@]} - 1 ))

   if [ "${2}" = "stopped" ] || [ "${2}" = "reconnect" ]; then
     for i in $(seq 0 $length); do
       del_prerouting "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
       del_forward "${Guest_ipaddr}" "${Guest_port[$i]}"
       #- allows port forwarding from localhost but
       # only if you use the ip (e.g http://192.168.1.20:8888/)
       del_output "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
     done
   fi

   if [ "${2}" = "start" ] || [ "${2}" = "reconnect" ]; then
     for i in $(seq 0 $length); do
       add_prerouting "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
       add_forward "${Guest_ipaddr}" "${Guest_port[$i]}"
       #- allows port forwarding from localhost but
       # only if you use the ip (e.g http://192.168.1.20:8888/)
       add_output "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
     done
   fi

 fi

 ###############################################
 # ONLY EDIT HERE
 Guest_name=vm-webserver
 ###############################################

 if [ "${1}" = "${Guest_name}" ]; then

   ###############################################
   # ONLY EDIT HERE
   Guest_ipaddr=192.168.33.2
   Host_port=( '80' '443' '8080' )
   Guest_port=( '80' '443' '8080' )
   ###############################################

   length=$(( ${#Host_port[@]} - 1 ))

   if [ "${2}" = "stopped" ] || [ "${2}" = "reconnect" ]; then
     for i in $(seq 0 $length); do
       del_prerouting "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
       del_forward "${Guest_ipaddr}" "${Guest_port[$i]}"
       #- allows port forwarding from localhost but
       # only if you use the ip (e.g http://192.168.1.20:8888/)
       del_output "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
     done
   fi

   if [ "${2}" = "start" ] || [ "${2}" = "reconnect" ]; then
     for i in $(seq 0 $length); do
       add_prerouting "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
       add_forward "${Guest_ipaddr}" "${Guest_port[$i]}"
       #- allows port forwarding from localhost but
       # only if you use the ip (e.g http://192.168.1.20:8888/)
       add_output "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
     done
   fi

 fi
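Before letting the hook touch the live firewall, it can help to see which rules a port mapping produces. This is a dry-run sketch, not part of the original script: `preview_guest_rules` is a hypothetical helper that only prints commands in the form of the add_* functions above, with the localhost OUTPUT rule pointing at the guest port. A single port without a colon means host port = guest port.

```shell
#!/bin/bash
# Hypothetical dry-run helper (not from the original page): print the iptables
# commands that would forward host ports to a guest. Nothing is changed.
#   $1 = guest IP, then one "host_port:guest_port" (or just "port") per argument
preview_guest_rules() {
    local guest_ip="$1" pair host_port guest_port
    shift
    for pair in "$@"; do
        host_port="${pair%%:*}"   # part before the colon
        guest_port="${pair##*:}"  # part after the colon (same if no colon)
        echo "iptables -t nat -A PREROUTING -p tcp --dport $host_port -j DNAT --to $guest_ip:$guest_port"
        echo "iptables -I FORWARD -d $guest_ip/32 -p tcp -m state --state NEW -m tcp --dport $guest_port -j ACCEPT"
        echo "iptables -t nat -I OUTPUT -p tcp -o lo --dport $host_port -j DNAT --to $guest_ip:$guest_port"
    done
}
```

For the vm-email guest, `preview_guest_rules 192.168.11.2 4444:80 4445:443 993` shows the rules for the first three entries of its port arrays.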

=== Troubleshooting Zentyal ===
Every time you change your network settings, e.g. in Zentyal, and thereby reset your NAT rules, you need to
# shut down or save the virtual machines
# restart libvirtd
# import the sysctl settings
# reapply all iptables settings with an '''extra script'''

'''Therefore use the script mentioned in the [[NAT]] section, i.e. the bash script given there for setting the iptables rules.'''

You can use hooks to do this:
* http://doc.zentyal.org/en/appendix-c.html

 sudo touch /etc/zentyal/hooks/firewall.postservice
 sudo chmod +x /etc/zentyal/hooks/firewall.postservice

 > vim /etc/zentyal/hooks/firewall.postservice

 #!/bin/bash
 /bin/bash /home/administrator/bin/kvm/set_iptables_all_guests remove
 sleep 1
 /bin/bash /home/administrator/bin/kvm/set_iptables_all_guests add

Then every time your firewall is restarted (which also happens when the network module is restarted!), your [[NAT]] settings are applied:
 sudo service zentyal firewall restart

=== Restarting libvirt manually ===
Restarting libvirt and setting everything right again is done manually with the following script:

 #!/bin/bash

 [ "$(id -u)" -eq 0 ] || { echo "Only run as root" >&2; exit 1; }

 echo "#############################################################"
 echo "## IPTABLES NAT"
 iptables -nvL -t nat

 /etc/init.d/libvirt-bin restart
 sleep 15

 /home/administrator/bin/kvm/set_iptables_all_guests remove
 /home/administrator/bin/kvm/set_iptables_all_guests remove
 /home/administrator/bin/kvm/set_iptables_all_guests add
 sleep 15

 /sbin/sysctl -p /etc/sysctl.conf
 iptables -A FORWARD -p tcp --tcp-flags SYN,RST SYN -j TCPMSS --clamp-mss-to-pmtu
 sleep 15

 echo "#############################################################"
 echo "## IPTABLES NAT"
 iptables -nvL -t nat

=== NAT rules ===
View the NAT rules with line numbers:
 iptables -nvL -t nat --line-numbers

Delete the PREROUTING rule with line number 1:
 iptables -t nat -D PREROUTING 1

Here "1" is the first PREROUTING rule shown by the listing command above. In the example listing in the section ''An example'', this is the first DNAT line (6 packets, host port 1222 to port 80).

To forward a port (e.g. 443, '''<port_of_vm>''') to the same port inside a virtual machine with a certain IP ('''<ip_vm>'''), do:
 iptables -t nat -I PREROUTING -d 0.0.0.0/0 -p tcp --dport <port_of_vm> -j DNAT --to-destination <ip_vm>

Another example lets port 80 traffic out of a bridged virtual machine on the bridge network 192.168.21.0/24; the second command removes the rule again:
 iptables -t nat -I premodules -s 192.168.21.0/24 -p tcp -m tcp --dport 80 -j ACCEPT
 iptables -t nat -D premodules -s 192.168.21.0/24 -p tcp --dport 80 -j ACCEPT

List all rules:
 iptables-save

Restore from a file or stdin:
 iptables-restore

Remove rules by their comment (tag the rules with a comment when adding them, then filter them out of the saved rules and restore the rest):
 comment="my special comment for this test rule"
 iptables -A ..... -m comment --comment "${comment}" -j REQUIRED_ACTION
 iptables-save | grep -v "${comment}" | iptables-restore

=== Bridge ===
Sometimes it is necessary to remove a bridge.

Remove all network interfaces from the bridge:
 brctl delif brX ethX

Bring the bridge down:
 ifconfig brX down

Remove the bridge:
 brctl delbr brX

== Autostart at boot time ==
Set the 'autostart' flag so the domain is started at boot time:
 virsh autostart myMachine

== Shutdown ==
On Ubuntu 12.04 LTS (Precise Pangolin), the shutdown scripts already take care of stopping the virtual machines (at least in the newest version of the libvirt-bin package). However, by default the script only waits 30 seconds for the VMs to shut down. Depending on the services running in the VM, this can be too short.

In this case, create a file '''/etc/init/libvirt-bin.override''' with the following content:

 > vim /etc/init/libvirt-bin.override
 # extend wait time for vms to shut down to 4 minutes
 env libvirtd_shutdown_timeout=240

== Backup KVM ==

Via LVM:
* http://pof.eslack.org/2010/12/23/best-solution-to-fully-backup-kvm-virtual-machines
* http://sandilands.info/sgordon/automatic-backup-of-running-kvm-virtual-machines

LiveBackup (under development - --[[User:Apos|Apos]] ([[User talk:Apos|talk]]) 18:07, 30 October 2013 (CET))
* http://wiki.qemu.org/Features/Livebackup

[[Category:Virtualisation]]
[[Category:Network]]
[[Category:KVM]]
[[Category:NAT]]
[[Category:Zentyal]]

Latest revision as of 17:08, 24 June 2020

Contents

[hide]- 1 Preface

- 2 Using VirtualBox and KVM together

- 3 Command line foo

- 4 Convert an image

- 5 Copy an image

- 6 Mount qcow images with nbd

- 7 VirtIO

- 8 Spice

- 9 Migration from VirtualBox to KVM

- 10 Accessing a - via Zentyal configured - bridged machine

- 11 Accessing services on KVM guests behind a NAT

- 12 Autostart at boot time

- 13 Shutdown

- 14 Backup KVM

Preface

As time of writing, I am using KVM on a Lenovo ThinkServer TS 430. The Machine uses an raid 5 array for the storage of the virtual machines. The Hypervisor is an Zentyal / Ubuntu 14.04 LTS distribution. It runs separately on a single harddisk. Zentyal makes the administration of network, bridges and firewall tasks a lot more easier than a bare Ubuntu system, but also adds some complexity to the system.

Concerning creating a good network topology. For the beginner, the following articles are a good starting point:

- Tuxradar Howto

- A detailed example with nice network topology diagram and iptables to get the big picture

- IBM Dokumentation using KVM

- Openstack - Virtualisation Platform

Using VirtualBox and KVM together

Many Tutorials say using VirtualBox and KVM together at the same server at the same time is NOT possible!!!

One says, it is possible:

Also see

You don't have to uninstall either of them! But you have to choose the runtime:

Use VirtualBox

sudo service qemu-kvm stop sudo service vboxdrv start

OR use KVM

sudo service vboxdrv stop sudo service qemu-kvm start

Decide!

Command line foo

Prerequisites:

sudo apt-get install ubuntu-vm-builder

Show running machines

virsh -c qemu:///system list

Save and restart a machine (hibernate)

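#!/bin/bash
VM="$1"
# Save the state of the running domain to a file (the domain is stopped)
virsh save "$VM" "/data/savedstate/$VM"
# Restore the domain from the saved state
virsh restore "/data/savedstate/$VM"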

Show bridges

brctl show

Show iptables rules

watch -n2 iptables -nvL -t nat

Renaming the image of a virtual machine is not a trivial task. It is NOT enough to edit the xml files in /etc/libvirt/qemu:

# [1] RENAME the image
mv /vms/my.img /vms/new_name.img

dom="my-domain"
virsh dumpxml "$dom" > "${dom}.xml"

# [2] EDIT the XML, so the disk path matches your move from [1]
vim "${dom}.xml"

# [3] Re-register the domain with the edited definition
virsh undefine "${dom}"
virsh define "${dom}.xml"
rm "${dom}.xml"
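If you prefer not to edit the XML by hand in step [2], the path can also be replaced non-interactively before running virsh define. A minimal sketch, assuming GNU sed (for sed -i) and image paths that contain no '|' or '&' characters; rewrite_disk_path is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: rewrite_disk_path XMLFILE OLD_PATH NEW_PATH
# Replaces every occurrence of OLD_PATH with NEW_PATH in a dumped
# domain XML file (e.g. in the <source file='...'/> of the disk).
# Assumes GNU sed (-i) and paths without '|' or '&' characters.
rewrite_disk_path() {
    xml=$1 old=$2 new=$3
    # Escape the dots so that they match literally in the sed pattern
    old_re=$(printf '%s\n' "$old" | sed 's/\./\\./g')
    # Use '|' as the delimiter, because the paths contain '/'
    sed -i "s|$old_re|$new|g" "$xml"
}
```

In the example above, `rewrite_disk_path "${dom}.xml" /vms/my.img /vms/new_name.img` would take the place of the vim step.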

Convert an image

A good starting point is OpenStack, THE stack for managing systems with virtual appliances, which has very good documentation on virtualisation systems overall:

- http://docs.openstack.org/image-guide/convert-images.html

- http://docs.openstack.org/image-guide/introduction.html

If you want to convert e.g. a (compressed) vmdk image to qcow2, you have to go in two steps via a raw image; a vdi image can be converted directly. You can use either qemu-img or VBoxManage for conversion tasks.

For the conversion, I highly recommend using ...

- two different drives for the input and output image (HDD, SSD)

- and a high-speed connection between them (USB 3.0, SATA)

... because the files can get very big and the conversion speed depends almost entirely on the speed of the hardware used.

From vdi to qcow2

If you have a vdi image, you can convert it directly to qcow2:

qemu-img convert -p -f vdi -O qcow2 'image.vdi' 'image.qcow2'

From vmdk via raw to qcow2

1. From vmdk to raw

This is always the first step:

qemu-img convert -p -f vmdk -O raw original_image.vmdk raw_image.raw

(2.00/100%)

Tip: Using -p shows the progress "(2.00/100%)" during the conversion.

2. From raw image to qcow2

Qcow(2) is the preferred format:

qemu-img convert -p -f raw -O qcow2 raw_image.raw original_image.qcow2

(2.00/100%)
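Both conversion paths above can be combined in one helper that picks the route from the file extension. A sketch, assuming the qemu-img commands shown above; to_qcow2 and RUN (a dry-run hook) are hypothetical names. The intermediate raw file is kept and has to be removed by hand.

```shell
#!/bin/sh
# Hypothetical helper: to_qcow2 IMAGE
#   *.vdi  -> qcow2 directly
#   *.vmdk -> raw -> qcow2 (two steps, as described above)
# The output is written next to the input with a new extension.
RUN=${RUN:-}

to_qcow2() {
    src=$1
    base=${src%.*}            # strip the extension
    case "$src" in
        *.vdi)
            $RUN qemu-img convert -p -f vdi -O qcow2 "$src" "$base.qcow2" ;;
        *.vmdk)
            $RUN qemu-img convert -p -f vmdk -O raw "$src" "$base.raw" &&
            $RUN qemu-img convert -p -f raw -O qcow2 "$base.raw" "$base.qcow2" ;;
        *)
            echo "to_qcow2: unsupported image: $src" >&2
            return 1 ;;
    esac
}
```

For example, `to_qcow2 original_image.vmdk` would produce original_image.raw and then original_image.qcow2.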

Copy an image

Copying an image is an easy task. The only things to remember are to change the UUID, the MAC address and the name of the virtual machine. If you copy the machine to another libvirt instance, make sure that the storage pools and e.g. virtual networks have the same names there!

- Change the UUID

- Change the MAC address

- Change the name of the machine (if in the same environment)

# Stop the machine (wait until "virsh list --all" shows it as shut off)
virsh shutdown my.vm

# Copy over the storage image (expands to: cp my.vm.img my-copy.vm.img)
cp {my.vm,my-copy.vm}.img

# Do an XML dump of the VM definition
virsh dumpxml my.vm > /tmp/my-new.xml

# Delete UUID and MAC address: libvirt assigns new ones!
sed -i '/uuid/d' /tmp/my-new.xml
sed -i '/mac address/d' /tmp/my-new.xml

# Rename the VM; also check that the disk <source file=.../> points to the copied image
sed -i 's/name_of_old_vm/new_name/g' /tmp/my-new.xml

# To copy over all files to a new system:
# the names of storage pools and e.g. virtual networks must match!

# Create the new VM
virsh define /tmp/my-new.xml
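Not part of the workflow above, but worth knowing: virt-clone (from the virtinst / virt-manager tools) automates the same steps (copying the disk, new UUID, new MAC address, new name). A sketch, assuming virt-clone is installed and you run it as root on the host; clone_vm and RUN are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: clone_vm OLD_NAME NEW_NAME
# Lets virt-clone copy the disk(s) and generate a new UUID and MAC address.
RUN=${RUN:-}

clone_vm() {
    # virt-clone needs the original to be shut off (or paused),
    # so refuse to continue unless the domain is shut off
    state=$(virsh domstate "$1") || return 1
    if [ "$state" != "shut off" ]; then
        echo "clone_vm: $1 is '$state', shut it down first" >&2
        return 1
    fi
    # --auto-clone derives the new disk file names from the originals
    $RUN virt-clone --original "$1" --name "$2" --auto-clone
}
```

For example, `clone_vm my.vm my-copy.vm` after `virsh shutdown my.vm` has completed.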

Mount qcow images with nbd

Sometimes a qcow(2) image does not start. There is an easy way to mount and recover such images on Linux with the nbd (network block device) kernel module.

Let's assume you want to check and repair a Windows 7 image, which normally has two NTFS partitions.

sudo su
modprobe nbd max_part=8
qemu-nbd --connect=/dev/nbd0 windows7.qcow2

Now you can handle the nbd device like a normal hard disk.

With e.g. cfdisk, you will then see output like the following:

cfdisk /dev/nbd0

Name     Flags   Part Typ   FS Type      [Label]               Size (MB)
                 Pri/Log    Free Space                              1,05
nbd0p1   Boot    Primary    ntfs         [System-reservier]       104,86
nbd0p2           Primary    ntfs                                42842,72
                 Pri/Log    Free Space

You can mount the partitions ...

mount /dev/nbd0p1 MOUNTPOINT_P1
mount /dev/nbd0p2 MOUNTPOINT_P2

... or check them with ntfsck or other tools.

ntfsck /dev/nbd0p1

After work, disconnect the device:

qemu-nbd --disconnect /dev/nbd0
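The connect, mount and disconnect steps can be wrapped so that both partitions are mounted read-only, which is a safer default when you only want to look at a broken image. A sketch, assuming root privileges, the device /dev/nbd0 and the two-partition layout from the example above; nbd_open, nbd_close, NBD_DEV and RUN are hypothetical names. Depending on the system, the partition devices may need a moment to appear after connecting.

```shell
#!/bin/sh
# Hypothetical helpers around qemu-nbd (run as root).
# nbd_open IMAGE MOUNTDIR : connect IMAGE and mount partitions 1 and 2
#                           read-only below MOUNTDIR/p1 and MOUNTDIR/p2
# nbd_close MOUNTDIR      : unmount both and disconnect the device
RUN=${RUN:-}
NBD_DEV=${NBD_DEV:-/dev/nbd0}

nbd_open() {
    $RUN modprobe nbd max_part=8 &&
    $RUN qemu-nbd --connect="$NBD_DEV" "$1" || return 1
    for p in 1 2; do
        $RUN mkdir -p "$2/p$p" &&
        $RUN mount -o ro "${NBD_DEV}p$p" "$2/p$p" || return 1
    done
}

nbd_close() {
    for p in 1 2; do
        $RUN umount "$1/p$p"
    done
    $RUN qemu-nbd --disconnect "$NBD_DEV"
}
```

For example, `nbd_open windows7.qcow2 /mnt/win`, inspect the files, then `nbd_close /mnt/win`.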

VirtIO

VirtIO drivers speed up network and disk access a lot for Windows systems. They are not part of the official Windows system. The easiest way is to download them from Proxmox (version < 173).

Also read:

Installation: If you downloaded an ISO version greater than 173, you can use the installer inside Windows. Please be aware that you cannot start a Windows system configured to use VirtIO without the VirtIO drivers installed!!!

Spice

This was tested with ubuntu 14.04 (emulator /usr/bin/kvm-spice). --Apos (talk) 20:58, 17 April 2014 (CEST)

Set the display type to Spice and the video adapter model to QXL.

Then make sure to have the following packages installed on the client side:

apt-get install python-spice-client-gtk spice-client-gtk virt-viewer virt-manager
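Once the guest runs with Spice, you can connect to it with remote-viewer (part of virt-viewer). A sketch, assuming you run it as root on the KVM host itself; virsh domdisplay prints the display URI of a running domain (e.g. spice://127.0.0.1:5900). spice_view and RUN are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: spice_view DOMAIN
# Asks libvirt for the display URI of a running domain and opens it
# with remote-viewer, but only if it is a Spice display.
RUN=${RUN:-}

spice_view() {
    uri=$(virsh domdisplay "$1") || return 1
    case "$uri" in
        spice://*) $RUN remote-viewer "$uri" ;;
        *) echo "spice_view: $1 has no Spice display ($uri)" >&2; return 1 ;;
    esac
}
```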

Migration from VirtualBox to KVM

This boils down to

- having a lot of time

- having a lot of free hard disk space

- creating a clone of the VirtualBox machine with VBoxManage clonehd (this can take a looooong time!). Cloning is the easiest way of getting rid of the snapshots of an existing virtual machine.

- converting the images from vdi to qcow2 format with qemu-img convert

- creating and configuring a new KVM guest

- adding some foo for NAT with a qemu hook (see below)

To clone an image on the same machine, you have to STOP kvm and start vboxdrv (see above). Also be aware that the raw images take up a lot of space!

# The conversion can take some time. Other virtual machines are not accessible during this time.
VBoxManage clonehd -format RAW myOldVM.vdi /home/vm-exports/myNewVM.raw
0%...

cd /home/vm-exports/
qemu-img convert -f raw myNewVM.raw -O qcow2 myNewVM.qcow
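The clone and convert steps above can be chained. A sketch, assuming VirtualBox (vboxdrv) is the active hypervisor at that moment and there is enough free space for the raw intermediate; vbox_to_qcow2 and RUN are hypothetical names, and the raw file is only deleted after both steps succeeded.

```shell
#!/bin/sh
# Hypothetical helper: vbox_to_qcow2 SOURCE.vdi OUTDIR NAME
# 1. VBoxManage clones the disk into a raw image
# 2. qemu-img converts the raw image to qcow2
# 3. the raw intermediate is removed only if both steps succeeded
RUN=${RUN:-}

vbox_to_qcow2() {
    raw="$2/$3.raw"
    qcow="$2/$3.qcow2"
    $RUN VBoxManage clonehd "$1" "$raw" --format RAW &&
    $RUN qemu-img convert -p -f raw -O qcow2 "$raw" "$qcow" &&
    $RUN rm -f "$raw"
}
```

For example, `vbox_to_qcow2 myOldVM.vdi /home/vm-exports myNewVM`.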

Cloning a Snapshot:

# for a snapshot do (not tested)
cd /to/the/SnapShot/dir
VBoxManage clonehd -format RAW "SNAPSHOT_UUID" /home/vm-exports/myNewVM.raw

When you start the (Windows) virtual machine with plain qemu-kvm, you will very likely get a BSOD. This can be circumvented by starting the machine as described in the following article:

... with something like

qemu-system-x86_64 ... -machine q35,accel=kvm -drive if=none,id=d1,file=your.image -device ahci,id=ahci -device ide-drive,bus=ahci.0,drive=d1

I found out myself that using these settings in virt-manager also works very well. I also set

- audio to ac97 and

- network to the e1000 driver

Then I start the machine in safe mode with networking and install the virtio drivers for networking from linux-kvm.org and the spice video guest drivers and qxl drivers from spice-space.org.

Accessing a - via Zentyal configured - bridged machine

On Ubuntu 12.04 there is Ubuntu bug #50093 (mentioned here), which prevents access to a machine inside the bridged network. The workaround is to stop passing bridged traffic to iptables:

> vim /etc/sysctl.conf
net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0

Activate

sysctl -p /etc/sysctl.conf

Make permanent

> vim /etc/rc.local
# *** Sample rc.local file ***
/sbin/sysctl -p /etc/sysctl.conf
iptables -A FORWARD -p tcp --tcp-flags SYN,RST SYN -j TCPMSS --clamp-mss-to-pmtu
exit 0

Verify

tail /proc/sys/net/bridge/*

iptables -L FORWARD

> brctl show
bridge name     bridge id               STP enabled     interfaces
br1             8000.50e5492d616d       no              eth1
                                                        vnet1
[...]
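The `tail /proc/sys/net/bridge/*` check can be turned into a small test that fails if bridged traffic is still passed to arptables/iptables/ip6tables. A sketch; check_bridge_nf is a hypothetical name, and the optional directory argument only exists so that the function can be tried on copies of these files.

```shell
#!/bin/sh
# Hypothetical helper: check_bridge_nf [DIR]
# Prints the three bridge-nf-call-* settings from the sysctl.conf above
# and returns 1 if any of them is not 0 (2 if a file cannot be read).
check_bridge_nf() {
    dir=${1:-/proc/sys/net/bridge}
    rc=0
    for key in arptables iptables ip6tables; do
        value=$(cat "$dir/bridge-nf-call-$key") || return 2
        echo "bridge-nf-call-$key = $value"
        [ "$value" = 0 ] || rc=1
    done
    return $rc
}
```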

Accessing services on KVM guests behind a NAT

Preface

Be careful: by doing this, you open up ports to the outside world. If you run pfSense or another firewall in front of your host, you can simply restrict access to a VPN.

Access from the internet to the guest:

internet -> pfSense (WAN / host ip and port) -> host port -> iptables -> nat bridge -> guest port

Access to the guest only via VPN:

internet -> pfSense (OpenVPN / host ip and port) -> host port -> iptables -> nat bridge -> guest port

The qemu hook

This is done by editing a hook script for qemu:

/etc/libvirt/hooks/qemu

This can be literally any executable: a bash script, a Python script, whatever.

It looks like this:

vim /etc/libvirt/hooks/qemu

#!/bin/bash
run_another_script param1 param2

Please look at the Qemu hook script section below for an example that uses this to configure your own iptables rules for your machines.

I am referring to this article:

which is mentioned in the libvirt wiki:

Control NAT rules

iptables is what you want, but there are some pitfalls:

- the PREROUTING rules that enable port forwarding into the NAT'ed machine must be applied before (!) the virtual machine starts

- if you have a service like Zentyal installed, or you restart your firewall, all rules are reset

- libvirt's NAT rules for the bridges are applied when the service starts; this can interfere with other rules

- this is handled by a qemu hook script in /etc/libvirt

On Zentyal, there are further pitfalls; please read this.

An example

The PREROUTING rules for vm-1 open up ports (e.g. 25, 587, 993 and 8080) for the NAT'ed virtual machine with the IP 192.168.122.2, so they are accessible from the outside world (web server, e-mail server, ...). In the listing below, the host ports 1222, 1223 and 1444 are forwarded to the guest ports 80, 443 and 8080. This also means that these ports can no longer be used on the host system (you should move e.g. the Zentyal admin interface to a different port).

The POSTROUTING rules are set automatically by virt-manager and allow the virtual machine to access the internet from inside using NAT.

iptables -nvL -t nat [--line-numbers]

Then you should see something like the following.

root@myHost:# iptables -nvL -t nat
Chain PREROUTING (policy ACCEPT 216 packets, 14658 bytes)
 pkts bytes target     prot opt in  out  source             destination
    6   312 DNAT       tcp  --  *   *    0.0.0.0/0          0.0.0.0/0           tcp dpt:1222 to:192.168.122.2:80
    2   120 DNAT       tcp  --  *   *    0.0.0.0/0          0.0.0.0/0           tcp dpt:1223 to:192.168.122.2:443
    0     0 DNAT       tcp  --  *   *    0.0.0.0/0          0.0.0.0/0           tcp dpt:1444 to:192.168.122.2:8080

Chain INPUT (policy ACCEPT 14 packets, 2628 bytes)
 pkts bytes target     prot opt in  out  source             destination

Chain OUTPUT (policy ACCEPT 12 packets, 818 bytes)
 pkts bytes target     prot opt in  out  source             destination

Chain POSTROUTING (policy ACCEPT 17 packets, 1048 bytes)
 pkts bytes target     prot opt in  out  source             destination
    0     0 MASQUERADE tcp  --  *   *    192.168.122.0/24  !192.168.122.0/24    masq ports: 1024-65535
    6   406 MASQUERADE udp  --  *   *    192.168.122.0/24  !192.168.122.0/24    masq ports: 1024-65535
    0     0 MASQUERADE all  --  *   *    192.168.122.0/24  !192.168.122.0/24
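For a compact overview of the port forwards, `iptables -t nat -S PREROUTING` prints the rules in command syntax, which is easy to parse. A sketch; list_dnat is a hypothetical name, and it only understands rules written with --dport and a DNAT target (iptables -S prints the DNAT target option as --to-destination).

```shell
#!/bin/sh
# Hypothetical helper: reads `iptables -t nat -S` output on stdin and
# prints one "host_port -> guest_ip:guest_port" line per DNAT rule.
list_dnat() {
    awk '/ -j DNAT / {
        port = ""; dest = ""
        # look at every option/value pair of the rule
        for (i = 1; i < NF; i++) {
            if ($i == "--dport") port = $(i + 1)
            if ($i == "--to-destination") dest = $(i + 1)
        }
        if (port != "" && dest != "") print port " -> " dest
    }'
}
```

Usage: `iptables -t nat -S PREROUTING | list_dnat`; for the listing above this should print lines like `1222 -> 192.168.122.2:80`.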

Qemu hook script

A script from here, slightly altered:

- Guest port same as host port

- ability to apply more than one port

- ability to serve more than one guest

- you can not distinguish between inside and outside port - not yet ;)

- ... will be updated!

Prerequisites: on a KVM host with the IP 192.168.0.10, a NAT'ed virtual network bridge for the network 192.168.122.0/24 was created with e.g. virt-manager.

If your virtual server (in this example the vm-webserver) has the IP 192.168.122.2/24, it must be attached to the NAT'ed bridge 192.168.122.0/24 with virt-manager.

Inside the machine, you have to set the bridge's address 192.168.122.1 as the gateway. Then, and only then, can you reach this machine (and its ports) with the following script via your host's (!!!) IP 192.168.0.10.

Port 443 of your web server on 192.168.122.2 can then be reached from outside via 192.168.0.10:443.

If there is a firewall in front of the KVM host, forward the ports to exactly this address (192.168.0.10:443) to reach the https website inside the vm-webserver with the IP 192.168.122.2.

Everything is handled by the gateway of the bridge 192.168.122.1 and the NAT rules you apply at start time.

Capiche?

> script="/etc/libvirt/hooks/qemu"; \
  touch $script; \
  chmod +x $script; \
  vim $script

The script runs another script, which first removes all custom iptables rules twice and then re-adds them to the system.

#!/bin/bash
/usr/local/bin/set_iptables_all_guests remove
sleep 1
/usr/local/bin/set_iptables_all_guests remove
sleep 1
/usr/local/bin/set_iptables_all_guests add

Set iptables script for guests (used by qemu hook)

Use the following script to administer the iptables rules of all your machines centrally.

This is handy, because you can test everything manually like this:

set_iptables_all_guests add
set_iptables_all_guests remove

Let's go:

vim /usr/local/bin/set_iptables_all_guests

#!/bin/bash
# Called by libvirt (qemu hook): set_iptables_all_guests GUEST start|stopped|reconnect
# Called manually / by the hooks: set_iptables_all_guests add|remove   (all guests)

del_prerouting() {
    iptables -t nat -D PREROUTING -p tcp --dport "${1}" -j DNAT --to "${2}:${3}"
}

del_forward() {
    iptables -D FORWARD -d "${1}/32" -p tcp -m state --state NEW -m tcp --dport "${2}" -j ACCEPT
}

del_output() {
    #- allows port forwarding from localhost but
    # only if you use the ip (e.g http://192.168.1.20:8888/)
    iptables -t nat -D OUTPUT -p tcp -o lo --dport "${1}" -j DNAT --to "${2}:${3}"
}

add_prerouting() {
    iptables -t nat -A PREROUTING -p tcp --dport "${1}" -j DNAT --to "${2}:${3}"
}

add_forward() {
    iptables -I FORWARD -d "${1}/32" -p tcp -m state --state NEW -m tcp --dport "${2}" -j ACCEPT
}

add_output() {
    #- allows port forwarding from localhost but
    # only if you use the ip (e.g http://192.168.1.20:8888/)
    iptables -t nat -I OUTPUT -p tcp -o lo --dport "${1}" -j DNAT --to "${2}:${3}"
}

# "add"/"remove" apply to ALL guests; otherwise libvirt's operation
# (${2}) is used for the guest named in ${1}.
case "${1}" in
    add)    All_op=start ;;
    remove) All_op=stopped ;;
    *)      All_op="" ;;
esac

# apply_rules OPERATION: uses Guest_ipaddr, Host_port and Guest_port
apply_rules() {
    if [ "${1}" = "stopped" ] || [ "${1}" = "reconnect" ]; then
        for i in "${!Host_port[@]}"; do
            del_prerouting "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
            del_forward "${Guest_ipaddr}" "${Guest_port[$i]}"
            del_output "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
        done
    fi
    if [ "${1}" = "start" ] || [ "${1}" = "reconnect" ]; then
        for i in "${!Host_port[@]}"; do
            add_prerouting "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
            add_forward "${Guest_ipaddr}" "${Guest_port[$i]}"
            add_output "${Host_port[$i]}" "${Guest_ipaddr}" "${Guest_port[$i]}"
        done
    fi
}

###############################################
# ONLY EDIT HERE
Guest_name=vm-email
# the admin interface via vpn and ports 4444 and 4445
###############################################
if [ "${1}" = "${Guest_name}" ] || [ -n "${All_op}" ]; then
    ###############################################
    # ONLY EDIT HERE
    Guest_ipaddr=192.168.11.2
    Host_port=( '4444' '4445' '993' '587' '25' '465' '143' )
    Guest_port=( '80' '443' '993' '587' '25' '465' '143' )
    ###############################################
    apply_rules "${All_op:-${2}}"
fi

###############################################
# ONLY EDIT HERE
Guest_name=vm-webserver
###############################################
if [ "${1}" = "${Guest_name}" ] || [ -n "${All_op}" ]; then
    ###############################################
    # ONLY EDIT HERE
    Guest_ipaddr=192.168.33.2
    Host_port=( '80' '443' '8080' )
    Guest_port=( '80' '443' '8080' )
    ###############################################
    apply_rules "${All_op:-${2}}"
fi

Troubleshooting Zentyal

Every time you change your network settings, e.g. in Zentyal, and thereby reset your NAT rules, you need to

- shut down or save the virtual machines

- restart libvirtd

- import the sysctl settings

- reapply all iptables settings with an extra script

For this, use the bash script for setting the iptables rules given in the NAT section.

You can use Zentyal hooks to do this:

sudo touch /etc/zentyal/hooks/firewall.postservice
sudo chmod +x /etc/zentyal/hooks/firewall.postservice

> vim /etc/zentyal/hooks/firewall.postservice
#!/bin/bash
/bin/bash /home/administrator/bin/kvm/set_iptables_all_guests remove
sleep 1
/bin/bash /home/administrator/bin/kvm/set_iptables_all_guests add

Then every time your firewall is restarted (which is also the case when the network module is restarted!), your NAT settings will be applied.

sudo service zentyal firewall restart

Restarting libvirt manually

Restarting libvirt and setting everything right again is done manually with the following script.

#!/bin/bash
# Abort unless running as root
[ "$UID" -eq 0 ] || { echo "Only run as root" >&2; exit 1; }
echo "#############################################################"
echo "## IPTABLES NAT"
iptables -nvL -t nat
/etc/init.d/libvirt-bin restart
sleep 15
/home/administrator/bin/kvm/set_iptables_all_guests remove
/home/administrator/bin/kvm/set_iptables_all_guests remove
/home/administrator/bin/kvm/set_iptables_all_guests add
sleep 15
/sbin/sysctl -p /etc/sysctl.conf
# Add the MSS clamping rule only if it is not there yet
iptables -C FORWARD -p tcp --tcp-flags SYN,RST SYN -j TCPMSS --clamp-mss-to-pmtu 2>/dev/null \
  || iptables -A FORWARD -p tcp --tcp-flags SYN,RST SYN -j TCPMSS --clamp-mss-to-pmtu
sleep 15
echo "#############################################################"
echo "## IPTABLES NAT"
iptables -nvL -t nat

NAT rules

View the NAT rules with line numbers: iptables -nvL -t nat --line-numbers

Delete the PREROUTING rule with the line number one:

iptables -t nat -D PREROUTING 1

Here "1" is the first PREROUTING rule that appears with the command above. In the example listing further up, this is the first PREROUTING rule (packet count "6"), which forwards to port 80.

To reach a port (<port_of_vm>, e.g. 443) inside the virtual machine with a certain IP (<ip_vm>), do:

iptables -t nat -I PREROUTING -d 0.0.0.0/0 -p tcp --dport <port_of_vm> -j DNAT --to-destination <ip_vm>

Another example is to let port 80 traffic out of a bridged virtual machine with the bridge network 192.168.21.0/24 (add the rule, and delete it again):

iptables -t nat -I premodules -s 192.168.21.0/24 -p tcp -m tcp --dport 80 -j ACCEPT
iptables -t nat -D premodules -s 192.168.21.0/24 -p tcp --dport 80 -j ACCEPT

List all rules:

iptables-save

Restore from a file or stdin:

iptables-restore

Remove commented rules (filter them out of the saved rules and restore the rest):

comment="my special comment for this test rule"

iptables -A ..... -m comment --comment "${comment}" -j REQUIRED_ACTION

iptables-save | grep -v "${comment}" | iptables-restore

Bridge

Sometimes it is necessary to remove a bridge.

Remove all network interfaces from the bridge:

brctl delif brX ethX

Bring the bridge down:

ifconfig brX down

Remove the bridge:

brctl delbr brX
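brctl and ifconfig are the classic tools; on newer systems the same three steps can be done with the ip command from iproute2. A sketch of that equivalent (not used in the original setup); remove_bridge and RUN are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: remove_bridge BRIDGE [INTERFACE...]
# iproute2 equivalent of: brctl delif / ifconfig down / brctl delbr
RUN=${RUN:-}

remove_bridge() {
    br=$1
    shift
    # Detach every listed interface from the bridge (brctl delif)
    for port in "$@"; do
        $RUN ip link set dev "$port" nomaster || return 1
    done
    # Bring the bridge down (ifconfig brX down) and delete it (brctl delbr)
    $RUN ip link set dev "$br" down &&
    $RUN ip link delete dev "$br" type bridge
}
```

For example, `remove_bridge br1 eth1` as root.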

Autostart at boot time

Set the 'autostart' flag so the domain is started upon boot time:

virsh autostart myMachine

Shutdown

On Ubuntu 12.04 LTS (Precise Pangolin) the shutdown scripts already take care of stopping the virtual machines (at least in the newest version of the libvirt-bin package). However, by default the script will only wait 30 seconds for the VMs to shut down. Depending on the services running in the VM, this can be too short.

In this case, you have to create a file /etc/init/libvirt-bin.override with the following content:

> vim /etc/init/libvirt-bin.override
# extend wait time for vms to shut down to 4 minutes
env libvirtd_shutdown_timeout=240
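If you manage several hosts, the override file can also be written by a script instead of an editor. A sketch, assuming the upstart override path shown above (only relevant for upstart-based releases); write_libvirt_override is a hypothetical name, and the optional file argument only exists for trying it out elsewhere.

```shell
#!/bin/sh
# Hypothetical helper: write_libvirt_override SECONDS [FILE]
# Writes the upstart override that extends the VM shutdown timeout.
write_libvirt_override() {
    secs=$1
    file=${2:-/etc/init/libvirt-bin.override}
    # Only accept a plain number of seconds
    case "$secs" in
        ''|*[!0-9]*) echo "write_libvirt_override: not a number: $secs" >&2; return 1 ;;
    esac
    {
        echo "# extend wait time for vms to shut down to $secs seconds"
        echo "env libvirtd_shutdown_timeout=$secs"
    } > "$file"
}
```

For example, `write_libvirt_override 240` as root produces the same setting as the file above.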

Backup KVM

Via LVM

- http://pof.eslack.org/2010/12/23/best-solution-to-fully-backup-kvm-virtual-machines

- http://sandilands.info/sgordon/automatic-backup-of-running-kvm-virtual-machines

LiveBackup (under development - --Apos (talk) 18:07, 30 October 2013 (CET))